Circles, I’m going in circles



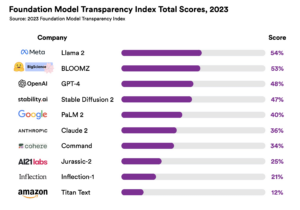

Open models do significantly better, but even they have issues. For example, in the data, labor, and compute categories, if we add up the developers' scores, the results are just 20%, 17%, and 17% respectively. That is extremely low.

Why is this a problem?

We need to understand that AI has tangible consequences for society and is primarily the domain of the research community. One of that community's key principles is transparency, so that other researchers can cross-check the work and offer meaningful critique.

The simplest example, and perhaps the one mentioned most often, is the bias that exists in training data. It is almost impossible to avoid it completely, because we humans are social and cultural products of our time. However, you can reduce it significantly if you make the training data public and thus allow multidisciplinary teams to check it. An AI model that reproduces biases can cause real-world problems, as we see in this issue's Learning Bytes.

We talk about circles in this issue because everything is connected, and we think it is especially important to be able to see the bigger picture.

Resources

AI Insider 📰

- Teleperformance launched its AI platform, TP Configuration, which aims at faster, more efficient processes and better customer service.

- Apple announced that it will invest $1 billion per year in AI development for its products. Siri, the Messages app, and Apple Music seem to be among the first to receive updates.

- Midjourney launched new upscalers. They are visible below every image that has been upscaled once already.

  - The 2x upscaler is subtle and tries to keep the details as close to the original as possible.

  - The 4x upscaler costs about 3x the GPU minutes that the 2x upscaler uses.

Midjourney also launched the beta version of its new site. The plan is for it to replace the existing one, and there are discussions about being able to use the Midjourney bot there rather than on Discord.

- Bill Gates gave an interview to Handelsblatt in which he said he believes that AI models like GPT have reached their peak for now.

- Instagram is testing a new AI feature that will allow users to make stickers from their photos.

Learning Bytes 🧐

- New research has shown that the best-known AI chatbots (ChatGPT, Bard, etc.) tend to reproduce incorrect or refuted information when asked medical questions related to race. The research highlights how serious bias and misinformation in AI are, and the real consequences they can have.

- Apple cancelled Jon Stewart's show “The Problem With Jon Stewart” (they must have said the same thing at the board meeting). The reason seems to be his views on AI and China, which were at odds with Apple's position on those issues.

- Research has shown that AI can make a significant contribution to Wikipedia, as it can “clean up” references by identifying problematic links and finding better sources. In almost half of the tests run, the AI found better citations.

- New York City has launched the beta version of the MyCity Business Services chatbot. It is an AI-powered platform that will help residents by providing them with information to manage their businesses.

- While the actors are still on strike, some of them participated in Meta and Realeyes' “emotion” research, which aims to build AI training datasets for Meta's avatars. The advertisement said that the gig does not count as strike work, but it is directly related to some of the core issues of the strike, such as how actors' likenesses will be used, the fact that actors have to be paid for that use, and what informed consent means. That last one is extremely important, because even though “their individual likeness will not be used for any commercial purposes”, it seems that the actors who signed the research agreements:

“have authorized Realeyes, Meta, and other parties of the two companies’ choosing to access and use not just their faces but also their expressions, and anything derived from them, almost however and whenever they want—as long as they do not reproduce any individual likenesses.”

Someone more astute than us would say that companies are trying to take advantage of the situation (an ongoing strike, a lack of rules and laws, actors in need of money) to collect as much training data as they can. Meanwhile, alongside all this mess, Hollywood is asking background actors to get body scans without specifying how the scans will be used. According to SAG reps, once the studios capture an actor's likeness, they retain the copyright to it and can use it indefinitely.

Cool Finds 🤯

See you next week! 💚

#TogetherWeAI
